Providing feedback on Red Hat documentation

If you have a suggestion to improve this documentation, or find an error, you can contact technical support at https://access.redhat.com to open a request.

1. Terraform integration

1.1. About the Terraform integration

Learn about the supported integrations between IBM HashiCorp products and Red Hat Ansible Automation Platform, the integration workflows, and migration paths to help determine the best options for your environment.

1.1.1. Introduction

Many organizations find themselves using both Ansible Automation Platform and Terraform Enterprise or HCP Terraform, recognizing that these can work in harmony to create an improved experience for developers and operations teams.

While Terraform Enterprise and HCP Terraform excel at Infrastructure as Code (IaC) for provisioning and de-provisioning cloud resources, Ansible Automation Platform is a versatile, all-purpose automation solution ideal for configuration management, application deployment, and orchestrating complex IT workflows across diverse domains.

This integration directly addresses common challenges such as managing disparate automation tools, ensuring consistent configuration across hybrid cloud environments, and accelerating deployment cycles. By bringing together Terraform’s declarative approach to infrastructure provisioning with Ansible Automation Platform’s procedural approach to configuration and orchestration, users can achieve:

-

Optimized costs: Reduce cloud waste, minimize manual processes, and combat tool sprawl. This integration can help reduce infrastructure costs and improve return on investment.

-

Reduced risk: Lower the risk of breaches, enforce policies, and significantly decrease unplanned downtime. The ability to review Terraform plan output before applying it in a workflow, with approval steps, enhances security and compliance.

-

Faster time to value: Boost developer productivity and deploy new compute resources more rapidly, leading to a faster time to market. This is achieved through unified lifecycle management and automation for Day 0 (provisioning), Day 1 (configuration), and Day 2 (ongoing management) operations.

By enabling direct calls between Ansible Automation Platform and Terraform Enterprise or HCP Terraform, organizations can shorten time to value by creating combined workflows, reduce risk through tighter product integration, and extend Infrastructure as Code with Ansible Automation Platform content and practices. The result is unified lifecycle management that spans initial provisioning and configuration, ongoing health checks, incident response, patching, and infrastructure optimization.

1.1.2. Integration workflows

Depending on your existing setup, you can initiate the integration from Ansible Automation Platform or from Terraform. Migration paths are also available for Terraform Community Edition users and for users moving from the cloud.terraform collection to the hashicorp.terraform collection.

Ansible-initiated workflow

Ansible automation hub collections allow Ansible Automation Platform users to leverage the Terraform Enterprise or HCP Terraform provisioning capabilities.

hashicorp.terraform collection

This collection provides API integration between Ansible Automation Platform and Terraform Enterprise or HCP Terraform. This solution works natively with Ansible Automation Platform and reduces setup complexity because it doesn’t require a binary installation and it includes a default execution environment.

cloud.terraform collection

This collection provides CLI integration between Ansible Automation Platform and Terraform Enterprise or HCP Terraform. To use this collection, you must install the terraform CLI binary and create an execution environment that includes it.

Although this collection is supported, we recommend using the hashicorp.terraform collection instead to take advantage of its API capabilities.

Migration workflows

Community edition users can migrate to Terraform Enterprise or HCP Terraform, and then integrate the Ansible Automation Platform capabilities using the cloud.terraform (CLI) collection. However, we recommend using the hashicorp.terraform (API) collection instead.

If you are already using the cloud.terraform collection, you can migrate to hashicorp.terraform.

Terraform-initiated workflow

For existing Terraform Enterprise or HCP Terraform users, Terraform can directly call Ansible Automation Platform at the end of provisioning for a more seamless and secure workflow. This enables Terraform Enterprise or HCP Terraform users to enhance their immutable infrastructure automation with Ansible Automation Platform Day 2 automation capabilities and manage infrastructure updates and lifecycle events.

1.2. Integrating from Ansible Automation Platform

As an administrator, you configure the integration from the Ansible Automation Platform user interface. Use the procedures that apply to the collection you have installed.

1.2.1. Authenticating to hashicorp.terraform

After installing or migrating to hashicorp.terraform, users must create credentials to use with job templates in Ansible Automation Platform.

Creating a credential

Users must create a credential to use with job templates in Ansible Automation Platform.

-

You must have a Terraform API token.

-

Log in to Ansible Automation Platform.

-

From the navigation panel, select , and then select Create credential.

-

From the Credential type list, select the HCP Terraform credential type.

-

In the Token field, enter the Terraform API token.

-

(Optional) Edit the Description field and select the TF organization from the Organization list.

-

Click Save credential. You are ready to use the credential in a job template.
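Optionally, you can confirm that a token is accepted by the Terraform API before you store it in a credential. The following Python sketch builds, but does not send, a GET request to the organizations endpoint of the HCP Terraform API, passing the token as a bearer token. The choice of endpoint and the helper name build_token_check are assumptions for illustration; for Terraform Enterprise, pass your own hostname instead of app.terraform.io.

```python
import urllib.request

# Assumption: HCP Terraform's public hostname. Use your own hostname for Terraform Enterprise.
DEFAULT_HOST = "app.terraform.io"


def build_token_check(token: str, host: str = DEFAULT_HOST) -> urllib.request.Request:
    """Build (but do not send) a request that lists the organizations the token can access."""
    if not token:
        raise ValueError("a Terraform API token is required")
    return urllib.request.Request(
        f"https://{host}/api/v2/organizations",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/vnd.api+json",
        },
        method="GET",
    )


if __name__ == "__main__":
    request = build_token_check("replace-with-your-token")
    print(request.get_method(), request.full_url)
    # Sending it, for example with urllib.request.urlopen(request, timeout=10),
    # is expected to succeed for a valid token and fail with HTTP 401 otherwise.
```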

1.2.2. Integrating with cloud.terraform

When you integrate with cloud.terraform, you must create a credential, build an execution environment, and launch a job template in Ansible Automation Platform.

Creating a credential

You can set up credentials directly from the Ansible Automation Platform user interface. When a job runs, Ansible Automation Platform injects the credentials into the execution environment as environment variables, so you do not need to add the token to each playbook manually.

-

You must have a Terraform API token.

-

Install the certified cloud.terraform collection from automation hub. (You need an Ansible subscription to access and download collections on automation hub.)

-

Log in to Ansible Automation Platform.

-

From the navigation panel, select .

-

Click Create credential type. The Create Credential Type page opens and displays the Details tab.

-

For the Credential Type, enter a name.

-

In the Input configuration field, enter the following YAML parameter and values:

fields:
  - id: token
    type: string
    label: token
    secret: true

-

In the Injector configuration field, enter the following configuration:

-

For Terraform Enterprise, replace <hostname> with the hostname where you have deployed TFE, with each period encoded as an underscore (for example, tfe.example.com becomes tfe_example_com):

env:
  TF_TOKEN_<hostname>: '{{ token }}'

-

For HCP Terraform, use:

env:
  TF_TOKEN_app_terraform_io: '{{ token }}'

-

-

To save your configuration, click Create Credential Type again. The new credential type is created.

-

To create an instance of your new credential type, select page, and select Create credential.

-

From the Credential type, select the name of the credential type you created earlier.

-

In the Token field, enter the Terraform API token.

-

(Optional) Edit the Description and select the TF organization from the Organization list.

-

Click Save credential.
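The injector variable name follows the Terraform CLI convention for host-specific API tokens: the prefix TF_TOKEN_ followed by the hostname, with periods encoded as underscores. The Terraform CLI documentation also allows hyphens in a hostname to be encoded as double underscores. The following Python sketch derives the variable name for a given host. The helper tf_token_env_var is hypothetical, and it does not handle non-ASCII hostnames, which must first be converted to punycode.

```python
def tf_token_env_var(hostname: str) -> str:
    """Return the TF_TOKEN_* variable name for a Terraform host (hypothetical helper).

    Hyphens become double underscores and periods become single underscores,
    following the Terraform CLI convention for host-specific tokens.
    """
    host = hostname.strip()
    if not host or not host.isascii():
        raise ValueError("expected a non-empty ASCII hostname (convert IDNs to punycode first)")
    return "TF_TOKEN_" + host.replace("-", "__").replace(".", "_")


if __name__ == "__main__":
    for name in ("app.terraform.io", "tfe.example.com", "my-tfe.example.com"):
        print(name, "->", tf_token_env_var(name))
```

For example, tf_token_env_var("app.terraform.io") returns TF_TOKEN_app_terraform_io, the same name used in the HCP Terraform injector configuration.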

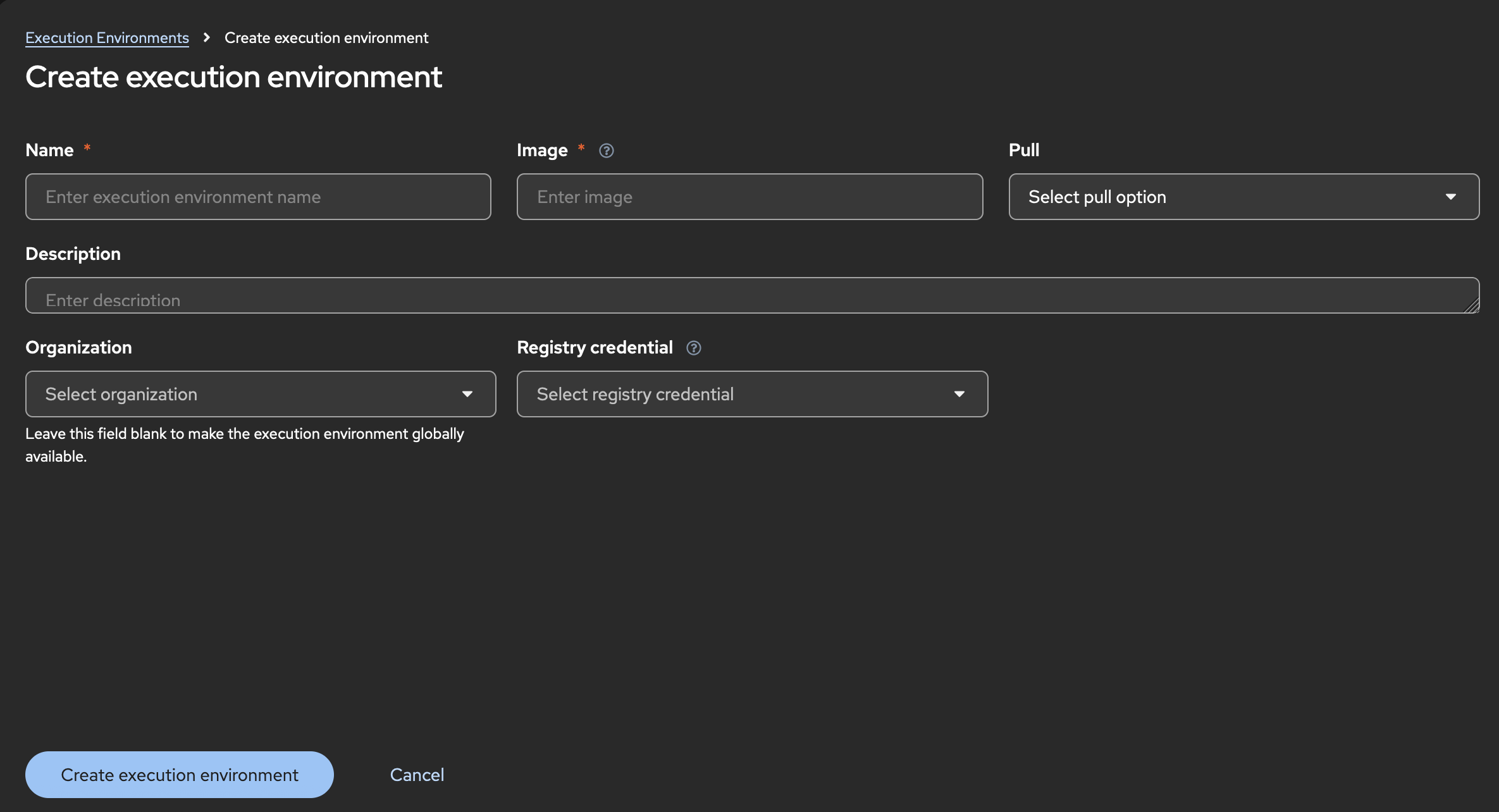

Building an execution environment in Ansible Automation Platform

You must add an execution environment that includes the terraform CLI binary to automation controller, so that Ansible Automation Platform can run the cloud.terraform modules with the credentials it provides.

-

You need a pre-existing execution environment image with the latest version of the cloud.terraform collection before you can add it to automation controller. You cannot use the default execution environment provided by Ansible Automation Platform because the default environment does not include the terraform CLI binary.

Note: If you have migrated from Terraform Community Edition, you can continue to use your existing execution environment and update it to the latest version of cloud.terraform.

-

Install the terraform CLI binary in your pre-existing execution environment. See Additional resources below for a link to the binary.

-

From the navigation panel, select .

-

Click Create execution environment.

-

For Name, enter a name for your Ansible Automation Platform execution environment.

-

For Image, enter the repository link to the image for your pre-existing execution environment.

-

Click Create execution environment. Your newly added execution environment is ready to be used in a job template.
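Before you add the image, you might confirm that it actually contains the terraform CLI, for example by running a short script inside the container. The following Python sketch looks up terraform on PATH and reads the version from the output of terraform version -json, which reports it in a terraform_version field. The function names are hypothetical; comparing the result with the Terraform version configured in your workspace is one way to carry out the version check listed in the Terraform Community Edition migration procedure.

```python
import json
import shutil
import subprocess
from typing import Optional


def parse_terraform_version(version_json: str) -> str:
    """Extract the version string from the output of `terraform version -json`."""
    return json.loads(version_json)["terraform_version"]


def installed_terraform_version() -> Optional[str]:
    """Return the Terraform version found on PATH, or None if the binary is missing."""
    binary = shutil.which("terraform")
    if binary is None:
        return None
    result = subprocess.run(
        [binary, "version", "-json"], capture_output=True, text=True, check=True
    )
    return parse_terraform_version(result.stdout)


if __name__ == "__main__":
    version = installed_terraform_version()
    if version is None:
        raise SystemExit("terraform CLI not found on PATH")
    print(f"terraform {version} found")
```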

Creating and launching a job template

Create and launch a job template to complete the integration and use the automation features in Ansible Automation Platform.

-

From the navigation panel, select .

-

Select Create template > Create Job Template.

-

From the Execution Environment list, select the environment you created.

-

From the Credentials list, select the credentials instance you created previously. If you do not see the credentials, click Browse to see more options in the list.

-

Enter any additional information for the required fields.

-

Click Create job template.

-

Click Launch template.

-

To launch the job, click Next and Finish. The job output shows that the job has run.

To verify from the Terraform user interface that the job ran successfully, select Workspaces > Ansible-Content-Integration > Run, where Ansible-Content-Integration is the name of the workspace used in this example. The Run list shows the state of the job, which is labeled Triggered via CLI. You can see the run move from the Queued state to the Plan Finished state.

1.3. Migrating from other versions

Migrate from earlier collections or from Terraform Community Edition to use the latest features of the HashiCorp and Ansible Automation Platform integrations.

1.3.1. Migrating from cloud.terraform to hashicorp.terraform

If you are using the existing cloud.terraform (CLI-based) collection, you can migrate your existing playbooks to the hashicorp.terraform (API-based) collection. The main modules for hashicorp.terraform that you must configure are hashicorp.terraform.configuration_version and hashicorp.terraform.run.

Configuring the hashicorp.terraform.configuration_version module

To migrate to the hashicorp.terraform collection, you must configure the hashicorp.terraform.configuration_version module. This module manages configuration versions in Terraform Enterprise or HCP Terraform.

-

Install the Ansible Automation Platform certified hashicorp.terraform collection.

-

Verify that a valid organization and workspace are correctly set up in Terraform Enterprise or HCP Terraform.

-

Rewrite your existing cloud.terraform tasks to use the hashicorp.terraform modules.

Example

- name: Create configuration version with auto_queue_runs to false
  hashicorp.terraform.configuration_version:
    workspace_id: ws-1234
    configuration_files_path: "/usr/home/tf"
    auto_queue_runs: false
    tf_validate_certs: true
    poll_interval: 3
    poll_timeout: 15
    state: present

-

Configure the following required parameters:

-

workspace_id or workspace + organization: The workspace ID, or the workspace name and organization, where the configuration version is created and the files are uploaded (for state: present).

-

configuration_files_path: The path to the Terraform configuration files that are uploaded to create a configuration version (for state: present). The module accepts two types of input for configuration_files_path:

-

Directory: Any folder containing Terraform configuration files. The module automatically creates a .tar.gz file from all of its contents, recursively.

-

.tar.gz archive: A pre-compressed gzip tarball. The module validates the TAR format and the gzip compression.

-

-

configuration_version_id: The ID of the configuration version to archive (for state: archived). This action deletes the associated uploaded .tar.gz file. Note the following:

-

You can archive only uploaded versions that were created using the API or CLI, have no active runs, and are not the current version for any workspace.

-

When configuration_version_id is unspecified, Terraform Enterprise or HCP Terraform selects the latest approved configuration_version_id in the workspace.

-

-

auto_queue_runs: Determines whether Terraform Enterprise or HCP Terraform automatically starts a run after the configuration is uploaded (true, the default) or requires you to start the run manually (false).

-

-

Set additional optional parameters as needed.
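The two input types that configuration_files_path accepts can be illustrated with Python's standard tarfile module: packaging a directory into a .tar.gz archive recursively, and checking that an existing file is gzip-compressed and contains a readable tar archive. This is a sketch of the behavior described above, not the collection's implementation; package_directory and is_valid_tar_gz are hypothetical names.

```python
import io
import os
import tarfile
import tempfile
import zlib


def package_directory(path: str) -> bytes:
    """Return a gzip-compressed tar archive of everything under `path`, recursively.

    Member names are relative to `path`, so the files sit at the archive root.
    """
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as archive:
        for entry in sorted(os.listdir(path)):
            # TarFile.add() recurses into subdirectories by default.
            archive.add(os.path.join(path, entry), arcname=entry)
    return buffer.getvalue()


def is_valid_tar_gz(data: bytes) -> bool:
    """Return True if `data` is gzip-compressed and contains a readable tar archive."""
    if data[:2] != b"\x1f\x8b":  # gzip magic number
        return False
    try:
        with tarfile.open(fileobj=io.BytesIO(data), mode="r:gz") as archive:
            archive.getmembers()  # reads every header, surfacing corruption or truncation
    except (tarfile.TarError, OSError, EOFError, zlib.error):
        return False
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        with open(os.path.join(workdir, "main.tf"), "w") as handle:
            handle.write("terraform {}\n")
        blob = package_directory(workdir)
        print(len(blob), "bytes; valid:", is_valid_tar_gz(blob))
```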

Configuring the hashicorp.terraform.run module

The hashicorp.terraform.run module lets you manage Terraform Enterprise or HCP Terraform runs using create, apply, cancel, and discard operations. You can trigger plans or apply operations on specified workspaces with customizable settings.

-

Ensure that a valid Terraform API token is properly configured to authenticate with your Terraform Enterprise or HCP Terraform environment.

-

Verify that a valid organization and workspace are correctly set up in Terraform Enterprise or HCP Terraform.

-

Create a task that uses the run module.

Example

- name: Create a destroy run with auto_apply
  hashicorp.terraform.run:
    workspace_id: ws-1234
    run_message: "destroy vpc"
    state: "present"
    tf_token: <your token>
    is_destroy: true
    auto_apply: true
    target_addrs:
      - "aws_vpc.vpc1"
      - "aws_vpc.vpc2"
    poll: true
    poll_interval: 10
    poll_timeout: 30

-

Configure the following required parameters:

-

workspace_id or workspace + organization: The workspace ID, or the workspace name and organization, in which the run is created (for state: present).

-

run_id: The ID of the run to apply, cancel, or discard.

-

tf_token: The Terraform API authentication token. If this value is not set, the TF_TOKEN environment variable is used.

-

-

(Optional) Configure the built-in polling options that determine the wait period for Terraform Enterprise or HCP Terraform operations to complete:

-

poll: true: (Default) Checks the run status every poll_interval seconds (default: 5s) until the run completes or poll_timeout (default: 25s) is reached, and then returns the final status.

-

poll: false: Returns immediately after initiating the run, without waiting.

-

-

Set additional optional parameters as needed.
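The poll, poll_interval, and poll_timeout options follow a common polling pattern. The following Python sketch implements that pattern against a caller-supplied status function, using the documented defaults of 5 and 25 seconds. The set of final run states and the function name wait_for_run are assumptions for illustration; the module's own logic might differ.

```python
import time
from typing import Callable

# Assumption: a subset of HCP Terraform run states treated as final in this sketch.
FINAL_STATES = {
    "applied", "planned_and_finished", "planned_and_saved",
    "errored", "discarded", "canceled", "force_canceled",
}


def wait_for_run(
    get_status: Callable[[], str],
    poll: bool = True,
    poll_interval: float = 5,
    poll_timeout: float = 25,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Return the run status, polling until a final state or until poll_timeout elapses."""
    status = get_status()
    if not poll:
        return status  # poll: false returns right after the run is initiated
    deadline = clock() + poll_timeout
    while status not in FINAL_STATES and clock() < deadline:
        sleep(poll_interval)
        status = get_status()
    return status  # a final state, or the last observed state if the timeout was reached
```

Passing clock and sleep as parameters lets you exercise the timeout logic without actually waiting.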

Migration examples for hashicorp.terraform modules

The following before-and-after examples show how cloud.terraform tasks can be rewritten with the hashicorp.terraform modules in a real-world environment.

Example 1: Plan Only

-

Before (cloud.terraform.terraform):

- name: Create a plan file using check mode
  cloud.terraform.terraform:
    force_init: true
    project_path: "/usr/home/tf"
    plan_file: "/usr/home/tf/terraform.tfplan"
    state: present
    check_destroy: true
    variables:
      environment: prod
  check_mode: true

-

After (hashicorp.terraform.*):

-

The configuration_version module:

- name: Create configuration version with auto_queue_runs to false
  hashicorp.terraform.configuration_version:
    workspace_id: ws-1234
    configuration_files_path: "usr/home/tf_files"
    auto_queue_runs: false
    tf_validate_certs: true
    poll_interval: 5
    poll_timeout: 10
    state: present

-

The plan_only run with the run module:

- name: Create a plan only run with variables
  hashicorp.terraform.run:
    workspace_id: ws-1234
    run_message: "plan-only vpc creation"
    poll: false
    state: "present"
    tf_token: "{{ tfc_token }}"
    plan_only: true
    variables:
      - key: "env"
        value: "production"

-

Example 2: Plan and apply

-

Before (cloud.terraform.terraform):

-

Generate the plan:

- name: Plan and Apply Workflow - Step 1 - Generate Plan
  cloud.terraform.terraform:
    force_init: true
    project_path: "/usr/home/tf"
    plan_file: "/usr/home/tf/workflow.tfplan"
    state: present
    variables:
      environment: prod
  check_mode: true

-

Apply the plan:

- name: Plan and Apply Workflow - Step 2 - Apply Plan
  cloud.terraform.terraform:
    project_path: "/usr/home/tf"
    plan_file: "/usr/home/tf/workflow.tfplan"
    state: present

-

-

After (hashicorp.terraform.*):

-

The configuration_version module:

- name: Create configuration version with auto_queue_runs to false
  hashicorp.terraform.configuration_version:
    workspace_id: ws-1234
    configuration_files_path: "usr/home/tf_files"
    auto_queue_runs: false
    tf_validate_certs: true
    poll_interval: 5
    poll_timeout: 10
    state: present

-

The run module with two options for the plan and apply workflow:

-

Option 1: Uses the auto_apply parameter to handle both the plan and apply steps:

- name: Create a run with auto_apply
  hashicorp.terraform.run:
    workspace_id: ws-1234
    run_message: "plan and apply vpc creation"
    state: "present"
    tf_token: "{{ tfc_token }}"
    auto_apply: true
    poll: true
    poll_interval: 10
    poll_timeout: 30

-

Option 2: Uses two sub-steps to create a save_plan run and then apply it:

-

Create the plan:

- name: Create a save plan run
  hashicorp.terraform.run:
    workspace_id: ws-1234
    run_message: "save plan vpc creation"
    state: "present"
    tf_token: "{{ tfc_token }}"
    poll: true
    poll_interval: 10
    poll_timeout: 30
    save_plan: true

-

Apply the plan. You get the run_id from the output of the run module task:

- name: Apply the save plan run
  hashicorp.terraform.run:
    run_id: run-1234
    state: "applied"
    tf_token: "{{ tfc_token }}"
    poll: true
    poll_interval: 10
    poll_timeout: 30

-
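The run module options map naturally onto the HCP Terraform and Terraform Enterprise Runs API. The following Python sketch builds the JSON:API request body for creating a run and the URL for the apply, cancel, and discard actions, which can help when comparing module parameters with API fields. The parameter-to-attribute mapping and the helper names are assumptions; the hashicorp.terraform modules might build their requests differently.

```python
import json
from typing import Dict, List, Optional


def run_create_body(
    workspace_id: str,
    message: str,
    auto_apply: Optional[bool] = None,
    plan_only: bool = False,
    save_plan: bool = False,
    is_destroy: bool = False,
    target_addrs: Optional[List[str]] = None,
    variables: Optional[Dict[str, str]] = None,
) -> dict:
    """Build a JSON:API body for POST /api/v2/runs (illustrative mapping)."""
    attributes = {
        "message": message,
        "plan-only": plan_only,
        "save-plan": save_plan,
        "is-destroy": is_destroy,
    }
    if auto_apply is not None:
        attributes["auto-apply"] = auto_apply  # when omitted, the workspace setting applies
    if target_addrs:
        attributes["target-addrs"] = list(target_addrs)
    if variables:
        # The Runs API expects HCL-encoded values; json.dumps quotes plain strings.
        attributes["variables"] = [
            {"key": key, "value": json.dumps(value)} for key, value in variables.items()
        ]
    return {
        "data": {
            "type": "runs",
            "attributes": attributes,
            "relationships": {
                "workspace": {"data": {"type": "workspaces", "id": workspace_id}}
            },
        }
    }


def run_action_url(host: str, run_id: str, action: str) -> str:
    """Return the URL for applying, canceling, or discarding an existing run."""
    if action not in {"apply", "cancel", "discard"}:
        raise ValueError(f"unsupported action: {action}")
    return f"https://{host}/api/v2/runs/{run_id}/actions/{action}"
```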

1.3.2. Migrating from Terraform Community Edition

If you want to use Ansible Automation Platform with Terraform Enterprise (TFE) or HCP Terraform and you are currently using Terraform Community Edition (TCE), you must migrate to TFE or HCP Terraform and then update Ansible Automation Platform configurations to work with TFE or HCP Terraform.

Migrating from the community edition

When you migrate from TCE to TFE or HCP Terraform, you are not migrating the collection itself. Instead, you are adapting your existing TCE usage to work with TFE or HCP Terraform.

After you migrate, you must update the Ansible Automation Platform credentials, execution environment, and job templates.


-

Use the latest supported version of Terraform (1.11 or higher).

-

Follow the tf-migrate CLI instructions under Additional resources below.

-

Ensure that the HCP Terraform or TFE workspace is not set to automatically apply plans.

-

To prevent errors when running playbooks against TFE or HCP Terraform, do the following actions before running a playbook:

-

Confirm that the Terraform version in the execution environment is the same as the version stated in TFE or HCP Terraform.

-

Perform an initialization in TFE or HCP Terraform:

terraform init

-

If you have a local state file in your execution environment, delete the local state file.

-

Get a token from HCP Terraform or Terraform Enterprise, which you will use to create the credential in a later step. Ensure that the token, whether it is a team token or a user token, has the permissions required for the operations that your playbook performs.

-

Remove the backend configuration and backend configuration files from your playbook definition.

-

Add the workspace to the default settings in your Terraform configuration, or set it in an environment variable if you want to define the workspace without updating the playbook itself.

Note: You can add the workspace to your playbook to scale your workspace utilization.

-

-

From the Ansible Automation Platform user interface:

-

(Optional) After the migration is completed and verified, you can run the additional modules and plugins from the collection in your execution environment:

1.4. Integrating from Terraform

If you have already provisioned your environment from Terraform Enterprise or HCP Terraform, you can use the official Terraform provider for Ansible Automation Platform to use its automation capabilities.

1.4.1. Configuring the provider

You must configure the provider to allow Terraform to reference and manage a subset of Ansible Automation Platform resources.

The provider configuration belongs in the root module of a Terraform configuration. Child modules receive their provider configurations from the root module.

-

You have installed and configured Terraform Enterprise or HCP Terraform.

-

You have installed the latest release version of terraform-provider-aap from the Terraform registry.

Note: The default latest version on the Terraform registry might be a pre-release version (such as 1.2.3-beta). Select a supported release version, which uses a 1.2.3 format without dashes.

-

You have created a username and password or an API token for Ansible Automation Platform. Environment variables are also supported.

Note: Token authentication is recommended because users can manage tokens for specific integrations (such as Terraform), limit token access, and have full control over the token lifecycle.
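The rule in the note about pre-release versions can be expressed as a simple filter: keep only versions in MAJOR.MINOR.PATCH form and choose the highest one numerically. The following Python sketch applies that rule to a list of version strings, for example one copied from the registry page. The helper latest_release is hypothetical.

```python
import re
from typing import Iterable, Optional, Tuple

# A release version is exactly MAJOR.MINOR.PATCH. A suffix such as "-beta" marks a pre-release.
RELEASE_PATTERN = re.compile(r"^(\d+)\.(\d+)\.(\d+)$")


def latest_release(versions: Iterable[str]) -> Optional[str]:
    """Return the highest release version, skipping pre-releases and malformed entries."""
    best_key: Optional[Tuple[int, ...]] = None
    best_version: Optional[str] = None
    for raw in versions:
        version = raw.strip()
        match = RELEASE_PATTERN.match(version)
        if match is None:
            continue
        key = tuple(int(part) for part in match.groups())  # numeric, so 1.10.0 > 1.9.9
        if best_key is None or key > best_key:
            best_key, best_version = key, version
    return best_version
```

For example, latest_release(["1.2.3-beta", "1.2.2", "1.10.0"]) returns "1.10.0", because the components are compared as numbers rather than as text.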

-

Create a Terraform configuration (.tf) file. Include a provider block. The name given in the block header is the local name of the provider to configure. This provider should already be included in a required_providers block.

Example

# This example creates an inventory named `My new inventory`
# and adds a host `tf_host` and a group `tf_group` to it,
# and then launches a job based on the "Demo Job Template"
# in the "Default" organization using the inventory created.
#
terraform {
  required_providers {
    aap = {
      source = "ansible/aap"
    }
  }
}

provider "aap" {
  host = "https://AAP_HOST"
  # Do not record credentials directly in the Terraform configuration.
  # Provide your token using the AAP_TOKEN environment variable.
  token = "my-aap-token"
}

resource "aap_inventory" "my_inventory" {
  name         = "My new inventory"
  description  = "A new inventory for testing"
  organization = 1
  variables = jsonencode(
    {
      "foo" : "bar"
    }
  )
}

resource "aap_group" "my_group" {
  inventory_id = aap_inventory.my_inventory.id
  name         = "tf_group"
  variables = jsonencode(
    {
      "foo" : "bar"
    }
  )
}

resource "aap_host" "my_host" {
  inventory_id = aap_inventory.my_inventory.id
  name         = "tf_host"
  variables = jsonencode(
    {
      "foo" : "bar"
    }
  )
  groups = [aap_group.my_group.id]
}

data "aap_job_template" "demo_job_template" {
  name              = "Demo Job Template"
  organization_name = "Default"
}

# To pass the inventory ID to the job template execution, the Inventory
# field on the job template must be set to "prompt on launch".
resource "aap_job" "my_job" {
  inventory_id    = aap_inventory.my_inventory.id
  job_template_id = data.aap_job_template.demo_job_template.id

  # Wait for the host and group to be created in the inventory before launching the job.
  depends_on = [
    aap_host.my_host,
    aap_group.my_group
  ]
}

-

Add the configuration arguments, as shown in the previous example. You must configure the host and credentials. The full list of supported arguments is available on the Terraform registry for your aap provider release version.

-

host: (String) AAP server URL. Can also be configured using the AAP_HOST environment variable.

-

insecure_skip_verify: (Boolean) If true, configures the provider to skip TLS certificate verification. Can also be configured by setting the AAP_INSECURE_SKIP_VERIFY environment variable.

-

password: (String, Case Sensitive) Password to use for basic authentication. Ignored if the token is set. Hardcoded credentials are not recommended for security reasons; as a best practice, use the AAP_PASSWORD environment variable instead.

-

timeout: (Number) Time limit for requests made to the AAP server. Defaults to 5 if not provided. A timeout of zero means no timeout. Can also be configured by setting the AAP_TIMEOUT environment variable.

-

token: (String, Case Sensitive) Token to use for token authentication. Hardcoded credentials are not recommended for security reasons; as a best practice, use the AAP_TOKEN environment variable instead.

-

username: (String) Username to use for basic authentication. Ignored if the token is set. Can also be configured by setting the AAP_USERNAME environment variable.

-

-

(Optional) You can use expressions in the values of these configuration arguments, but can only reference values that are known before the configuration is applied.

-

(Optional) You can also use the alias meta-argument, which is defined by Terraform and is available for all provider blocks. alias lets you use the same provider with different configurations for different resources.
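Each provider argument above can also come from an AAP_* environment variable, and username and password are ignored when a token is set. The following Python sketch models that resolution so the precedence is easy to follow. It is not the provider's code, and it assumes that a value set in the provider block takes priority over the matching environment variable; confirm the behavior in the provider documentation for your release. The function name resolve_aap_settings is hypothetical.

```python
import os
from typing import Mapping, Optional


def resolve_aap_settings(
    args: Mapping[str, object], env: Optional[Mapping[str, str]] = None
) -> dict:
    """Combine provider-block arguments with AAP_* environment variables (illustrative model)."""
    env = os.environ if env is None else env

    def pick(name: str, default: object = None) -> object:
        # Assumption: an explicit provider-block value wins over AAP_<NAME>.
        if args.get(name) is not None:
            return args[name]
        return env.get(f"AAP_{name.upper()}", default)

    settings = {
        "host": pick("host"),
        "token": pick("token"),
        "insecure_skip_verify": str(pick("insecure_skip_verify", "false")).lower() == "true",
        "timeout": int(pick("timeout", 5)),  # default 5; zero means no timeout
    }
    if not settings["host"]:
        raise ValueError("host is required: set it in the provider block or in AAP_HOST")
    if settings["token"]:
        settings["auth"] = "token"  # username and password are ignored
    else:
        settings["auth"] = "basic"
        settings["username"] = pick("username")
        settings["password"] = pick("password")
    return settings
```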

1.4.2. Using TF Actions and Ansible Automation Platform

Use Terraform (TF) Actions with Ansible Automation Platform to trigger automated configuration after your infrastructure is provisioned.

About Terraform Actions and Ansible Automation Platform

Terraform (TF) Actions add an imperative action block to the HCL language, which lets you run steps after infrastructure is provisioned without leaving the declarative Terraform workflow. This keeps the entire infrastructure and configuration process visible in your Terraform configuration.

TF Actions can be used to trigger Ansible automation for configuration management, such as sending an event and payload to Ansible Automation Platform to configure newly provisioned virtual machines.

The Terraform provider for Ansible Automation Platform implements two actions:

-

Launch a job directly: Runs the job as a direct, immediate execution request to Ansible Automation Platform. You must explicitly define which specific Ansible Automation Platform job template the TF Action should call.

-

Use Event-Driven Ansible: Sends an event to Ansible Automation Platform, which then uses rulebooks to decide which playbook to run based on the event’s payload. This allows for more dynamic, scalable, and reactive automation.

Using TF Actions as a direct job

When you use TF Actions to launch jobs directly with Ansible Automation Platform, the process is streamlined and sequential.

The benefit of this approach is a clean, predictable state: the Ansible job launches during the Terraform apply cycle, and Terraform receives a clear, binary status. Note that each change launches a separate job with identical configuration.

This method can be useful when you want to run Ansible automation against newly provisioned servers, for example, for last-mile provisioning or to apply a routine security patching job to a new host.

-

You have configured the AAP Terraform provider to authenticate with Ansible Automation Platform.

-

You have configured the AWS Terraform provider to authenticate with Amazon Web Services.

Note: The example below uses Amazon Web Services (AWS) and requires an AWS account that might incur charges. You can adapt the pattern to use a different cloud provider.

-

You have job templates configured with:

-

Inventory set to prompt on launch.

-

A machine credential (private key) matching a public key available in a local file.

-

-

Define the aap_job_launch action in your *.tf file.

-

Add a lifecycle block with an action_trigger to the resource to define which action is invoked and on which lifecycle event.

Example

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 6.0"
    }
    aap = {
      source  = "ansible/aap"
      version = "~> 1.4.0"
    }
  }
}

provider "aap" {
  # Configure authentication as needed.
}

provider "aws" {
  region = "us-west-1"
  # Configure authentication as needed.
}

variable "public_key_path" {
  type        = string
  description = "Local path to a public key file to inject into the VM. Your AAP Job Template must have the matching private key configured as a machine credential."
}

resource "aws_key_pair" "key_pair" {
  key_name   = "aap-terraform-actions-demo-key"
  public_key = file(var.public_key_path)
}

data "aws_ami" "rhel_ami" {
  most_recent = true

  filter {
    name   = "name"
    values = ["RHEL-9*"]
  }

  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }

  owners = ["309956199498"] # Red Hat
}

resource "aws_instance" "instance" {
  ami                         = data.aws_ami.rhel_ami.id
  instance_type               = "t2.micro"
  associate_public_ip_address = true
  key_name                    = aws_key_pair.key_pair.key_name
}

# Look up Organization ID
data "aap_organization" "organization" {
  name = "Default"
}

# Create an inventory
resource "aap_inventory" "inventory" {
  name         = "Actions Demo Inventory"
  organization = data.aap_organization.organization.id
}

data "aap_job_template" "job_template" {
  name              = "Demo Job Template"
  organization_name = data.aap_organization.organization.name
}

#
# Direct job launch action example
#
resource "aap_host" "host" {
  inventory_id = aap_inventory.inventory.id
  name         = resource.aws_instance.instance.public_ip

  # Setting a value of 10 for SSH retries because terraform will mark the
  # instance 'created' before it is ready to accept connections from Ansible.
  variables = jsonencode(
    {
      "ansible_ssh_retries" : 10
    }
  )

  # Configure a job launch after the host is created in inventory
  lifecycle {
    action_trigger {
      events  = [after_create]
      actions = [action.aap_job_launch.job_launch]
    }
  }
}

action "aap_job_launch" "job_launch" {
  config {
    inventory_id        = aap_inventory.inventory.id
    job_template_id     = data.aap_job_template.job_template.id
    wait_for_completion = true
  }
}

-

(Required) Change the job template name and the inventory name in this example to match your environment.

-

(Optional) Keep owners set to the Red Hat AWS account ID (309956199498) and most_recent set to true so that the latest matching RHEL 9 image is used each time you apply the configuration.

-

(Optional) Set additional parameters as needed. For example, if you set wait_for_completion to true, Terraform waits until the job is created and reaches a final state before continuing. You can also set wait_for_completion_timeout_seconds to control the timeout limit.

-

Update and commit the Terraform code.

-

Execute the Terraform plan and apply it.
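The prerequisite that the job template prompts for inventory on launch exists because the action launches the template with an inventory override. The following Python sketch builds, but does not send, the kind of launch request involved, using the automation controller API. It assumes the Ansible Automation Platform 2.5 API path /api/controller/v2/ and a bearer token; the provider's actual requests and the helper name build_job_launch are assumptions for illustration.

```python
import json
import urllib.request


def build_job_launch(
    aap_host: str, token: str, job_template_id: int, inventory_id: int
) -> urllib.request.Request:
    """Build (but do not send) a job template launch request that overrides the inventory.

    The inventory override is accepted only when the job template prompts for
    inventory on launch.
    """
    url = f"{aap_host.rstrip('/')}/api/controller/v2/job_templates/{job_template_id}/launch/"
    body = json.dumps({"inventory": inventory_id}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
```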

Using TF Actions with Event-Driven Ansible

Event-Driven Ansible is an automation feature that allows Ansible Automation Platform to react to real-time events, instead of being triggered on a schedule or by a manual request.

Configuring an event stream

To use TF Actions with Event-Driven Ansible, you must first configure the event stream in Ansible Automation Platform. TF actions will post events to this stream.

-

You have configured the AAP Terraform provider to authenticate with Ansible Automation Platform.

-

You have configured the AWS Terraform provider to authenticate with Amazon Web Services.

Note: The example below uses Amazon Web Services (AWS) and requires an AWS account that might incur charges. You can adapt the pattern to use a different cloud provider.

You have an Ansible Automation Platform inventory named EDA Actions Demo Inventory in the Default organization.

You have job templates configured with:

-

Inventory set to EDA Actions Demo Inventory.

-

A machine credential (private key) matching a public key available in a local file.

-

Log in to the Ansible Automation Platform user interface.

-

Navigate to .

-

Click Create event stream.

-

On the Create event stream page, edit the fields:

-

Name: A descriptive, unique name for your event stream (such as Terraform provider_Events).

-

Organization: Select the organization this event stream will belong to (usually Default or your specific organization).

-

Event stream type: Select the type that matches how you want to receive events. Basic Event Stream (username/password) is supported with this integration.

-

Credential: Select a credential that you have pre-created for authentication with this event stream.

-

Headers: (Optional) Enter comma-separated HTTP header keys that you want to include in the event payload that gets forwarded to the rulebook. Leave this empty to include all headers.

-

Forward events to rulebook activation: This option is typically enabled by default. Disabling it is useful for testing and diagnosing your connection and incoming data without inadvertently triggering any automation.

-

-

Click Create event stream. Then navigate to to verify the event stream was created and see the number of events received so far.

You can also click on the specific stream to see its detailed configuration, including the organization, event stream type, associated credential, and event forwarding settings.

-

Set up a rulebook activation. Make sure to:

-

Add the event stream to the rulebook.

-

(Recommended) Select the Rulebook activation enabled? option to automatically start the activation after creation.

-

Activate the rulebook.

-

-

Select to verify that the rulebook is active and check its status.
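Before you run Terraform, you might want to confirm that the event stream accepts posts. The following Python sketch uses only the standard library to build a POST request with HTTP basic authentication and a JSON body whose keys mirror the event.payload fields read by the example rulebook in this document. The URL, username, and password are placeholders: copy the URL from your event stream's details page and use the credential attached to the stream. The payload shape is an assumption for testing, not the exact body that the aap_eda_eventstream_post action sends.

```python
import base64
import json
import urllib.request


def build_test_event(url: str, username: str, password: str, payload: dict) -> urllib.request.Request:
    """Build, but do not send, a POST request for a Basic-auth event stream."""
    # Basic authentication: base64("username:password") in the Authorization header.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


# Placeholder values: replace them with your event stream URL and credential.
request = build_test_event(
    "https://aap.example.com/placeholder-event-stream-url/",
    "stream-user",
    "stream-password",
    {
        "template_type": "job",
        "job_template_name": "Demo Job Template",
        "organization_name": "Default",
        "limit": "all",
    },
)
print(request.get_method(), request.full_url)
# To send it: urllib.request.urlopen(request)
```

If Forward events to rulebook activation is enabled, a payload like this one could launch Demo Job Template. To test only the connection, disable forwarding first and then check that the stream's event count increases.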

Configuring TF Actions

To connect the event stream to Terraform actions, you configure the main TF file (*.tf) in Terraform.

-

Add a lifecycle block to the resource in your *.tf file that should trigger the Event-Driven Ansible event stream. In the following example, the after_create event triggers the action.aap_eda_eventstream_post.event_post action.

Example

The following example shows a lifecycle block added to the provisioning of an AWS EC2 server. After the new server is provisioned and added to the inventory as a host, the action runs. The name in the aap_eda_eventstream data source must match the name of the event stream that you created.

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 6.0"
    }
    aap = {
      source  = "ansible/aap"
      version = "~> 1.4.0"
    }
  }
}

provider "aap" {
  # Configure authentication as needed.
}

provider "aws" {
  region = "us-west-1"
  # Configure authentication as needed.
}

variable "public_key_path" {
  type        = string
  description = "Local path to a public key file to inject into the VM. Your AAP Job Template must have the matching private key configured as a machine credential."
}

variable "event_stream_username" {
  type = string
}

variable "event_stream_password" {
  type = string
}

resource "aws_key_pair" "key_pair" {
  key_name   = "aap-terraform-actions-demo-key"
  public_key = file(var.public_key_path)
}

data "aws_ami" "rhel_ami" {
  most_recent = true

  filter {
    name   = "name"
    values = ["RHEL-9*"]
  }

  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }

  owners = ["309956199498"] # Red Hat
}

resource "aws_instance" "instance" {
  ami                         = data.aws_ami.rhel_ami.id
  instance_type               = "t2.micro"
  associate_public_ip_address = true
  key_name                    = aws_key_pair.key_pair.key_name
}

# Look up an inventory
data "aap_inventory" "inventory" {
  name              = "EDA Actions Demo Inventory"
  organization_name = "Default"
}

#
# EDA Event launch action example
#
resource "aap_host" "host" {
  inventory_id = data.aap_inventory.inventory.id
  name         = resource.aws_instance.instance.public_ip

  # Configure an EDA eventstream POST after the host is created in inventory
  lifecycle {
    action_trigger {
      events  = [after_create]
      actions = [action.aap_eda_eventstream_post.event_post]
    }
  }
}

data "aap_eda_eventstream" "eventstream" {
  name = "TF Actions Event Stream"
}

action "aap_eda_eventstream_post" "event_post" {
  config {
    limit             = "all"
    template_type     = "job"
    job_template_name = "Demo Job Template"
    organization_name = "Default"

    event_stream_config = {
      username = var.event_stream_username
      password = var.event_stream_password
      url      = data.aap_eda_eventstream.eventstream.url
    }
  }
}

-

(Required) Configure the following parameters:

-

event_stream_config: (Attributes) Details for the Event-Driven Ansible event stream. You must include:

-

username: (String) Username to use when performing the POST to the event stream URL.

-

password: (String) Password to use when performing the POST to the event stream URL.

-

url: (String) URL to receive the event POST.

-

limit: (String) Ansible Automation Platform limit for job execution.

-

organization_name: (String) Organization name.

-

template_type: (String) Template type: either job or workflow_job.

-

-

(Optional) Adjust the image lookup. The example sets owners to the Red Hat AWS account ID (309956199498) and most_recent to true, so that the latest matching RHEL 9 image is selected each time you run Terraform.

-

(Optional) Set additional parameters as needed.

-

In your rulebook, add a rule that matches the event payload sent by the action and launches the target template. The following example rule launches a workflow job template. For a complete rulebook with rules for both job templates and workflow job templates, see Example rulebook.

Example:

- name: Dispatch TF Workflow Job Template Action
  condition: event.payload.template_type == "workflow"
  throttle:
    once_after: 1 minute
    group_by_attributes:
      - event.payload.workflow_template_name
      - event.payload.limit
      - event.payload.organization_name
  actions:
    - debug:
        msg: "Executing Workflow Template {{ event.payload.workflow_template_name }}"
    - run_workflow_template:
        name: "{{ event.payload.workflow_template_name }}"
        organization: "{{ event.payload.organization_name }}"
    - debug:
        msg: "Executed Workflow Job Template {{ event.payload.workflow_template_name }}"
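The rules in this document choose an action by comparing event.payload.template_type with a literal string. The following Python sketch models that decision outside ansible-rulebook so that you can check which rule a given payload would reach. It is a simplified illustration, not how ansible-rulebook evaluates conditions, and it ignores throttling. Because the comparisons are exact, a payload whose template_type is any other string matches no rule and nothing runs. Note that the parameter list above describes template_type values as job or workflow_job, while the workflow rule compares against workflow, so confirm which value your provider version sends.

```python
from typing import Optional, Tuple


def route_event(payload: dict) -> Optional[Tuple[str, str, str]]:
    """Mirror the example rules: pick the rulebook action for a TF Actions payload."""
    template_type = payload.get("template_type")
    # Rule "Dispatch TF Job Template Action"
    if template_type == "job":
        return ("run_job_template", payload["job_template_name"], payload["organization_name"])
    # Rule "Dispatch TF Workflow Job Template Action"
    if template_type == "workflow":
        return ("run_workflow_template", payload["workflow_template_name"], payload["organization_name"])
    # No condition matched, so no action runs.
    return None


print(route_event({
    "template_type": "job",
    "job_template_name": "Demo Job Template",
    "organization_name": "Default",
    "limit": "all",
}))
# ('run_job_template', 'Demo Job Template', 'Default')
```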

Creating and applying the plan

After you configure your Terraform plan to include Event-Driven Ansible events, you create and apply the plan to trigger the events.

-

Run terraform init to initialize your working directory.

-

Use terraform plan to create the plan. The following example saves the plan to a file named tfplan.out, but you can use any file name. Saving the plan is a best practice for automation because terraform apply then performs exactly the changes in the saved plan that you reviewed.

terraform plan -out=tfplan.out

-

Review the plan output.

-

Apply the saved plan.

terraform apply tfplan.out

Terraform creates the resources. After each resource that has an action_trigger is created, Terraform invokes the TF action, which sends an event to the specified event stream, and the corresponding Ansible Automation Platform jobs run sequentially.

-

Verify that the runs are updated in the Terraform user interface. Drill down on a resource to see that the action was invoked and a post event was executed.

-

From the Ansible Automation Platform user interface, verify that Event-Driven Ansible receives the event and triggers the appropriate rulebook activation:

-

Check the Event Streams dashboard to see that the TF Actions events were received.

-

Check the Jobs dashboard to see the jobs running sequentially and with a Success status.

-

Check the Inventory dashboard to see the updates. For example, if you created new servers, check the Hosts tab for the Terraform provisioned inventory.

-

Example rulebook

The following rulebook example shows how to use TF actions and Event-Driven Ansible to listen for events on a webhook.

- name: Listen for events on a webhook
  hosts: all

  ## Define our source for events
  sources:
    - ansible.eda.webhook:
        host: 0.0.0.0
        port: 5000
      filters:
        - ansible.eda.insert_hosts_to_meta:
            host_path: payload.limit

  ## Define the conditions we are looking for
  rules:
    - name: Dispatch TF Job Template Action
      condition: event.payload.template_type == "job"
      throttle:
        once_after: 1 minute
        group_by_attributes:
          - event.payload.job_template_name
          - event.payload.limit
          - event.payload.organization_name
      actions:
        - debug:
            msg: "Executing Job Template {{ event.payload.job_template_name }}"
        - run_job_template:
            name: "{{ event.payload.job_template_name }}"
            organization: "{{ event.payload.organization_name }}"
        - debug:
            msg: "Executed Job Template {{ event.payload.job_template_name }}"

    - name: Dispatch TF Workflow Job Template Action
      condition: event.payload.template_type == "workflow"
      throttle:
        once_after: 1 minute
        group_by_attributes:
          - event.payload.workflow_template_name
          - event.payload.limit
          - event.payload.organization_name
      actions:
        - debug:
            msg: "Executing Workflow Template {{ event.payload.workflow_template_name }}"
        - run_workflow_template:
            name: "{{ event.payload.workflow_template_name }}"
            organization: "{{ event.payload.organization_name }}"
        - debug:
            msg: "Executed Workflow Job Template {{ event.payload.workflow_template_name }}"
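Both rules use throttle with once_after and group_by_attributes so that a burst of events, such as several events posted during one terraform apply, does not launch the same template many times. The following Python sketch is a simplified model of that behavior, based on our reading of the ansible-rulebook throttle documentation: events that share the same group_by_attributes values are collected for the window, and the rule fires once per group when the window ends. The function is hypothetical; it tracks only fire times and event counts and does not model which event's data the action receives.

```python
from typing import Dict, Iterable, List, Tuple

GROUP_BY = ("job_template_name", "limit", "organization_name")


def throttle_once_after(
    events: Iterable[Tuple[float, dict]],
    window_seconds: float,
    group_by: Tuple[str, ...] = GROUP_BY,
) -> List[Tuple[float, tuple, int]]:
    """Return (fire_time, group_key, events_in_window) for each time the rule would fire."""
    open_windows: Dict[tuple, list] = {}  # group key -> [window start, event count]
    fired: List[Tuple[float, tuple, int]] = []
    for timestamp, payload in sorted(events, key=lambda event: event[0]):
        key = tuple(payload.get(attr) for attr in group_by)
        window = open_windows.get(key)
        if window is not None and timestamp >= window[0] + window_seconds:
            # The previous window for this group has ended, so it fires once.
            fired.append((window[0] + window_seconds, key, window[1]))
            window = None
        if window is None:
            open_windows[key] = [timestamp, 1]  # the first event opens a new window
        else:
            window[1] += 1  # later events in the same window are absorbed
    # Windows still open at the end fire when they expire.
    for key, (start, count) in open_windows.items():
        fired.append((start + window_seconds, key, count))
    return sorted(fired, key=lambda item: (item[0], repr(item[1])))


payload = {"job_template_name": "Demo Job Template", "limit": "all", "organization_name": "Default"}
print(throttle_once_after([(0, payload), (10, payload), (30, payload)], 60))
# [(60, ('Demo Job Template', 'all', 'Default'), 3)]
```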

2. Vault integration

2.1. About the Vault integration

The integration of Red Hat Ansible Automation Platform and IBM HashiCorp Vault provides fully automated lifecycle management for Vault.

2.1.1. Introduction

Vault lets you centrally store and manage secrets securely. The Ansible Automation Platform certified hashicorp.vault collection provides fully automated lifecycle and operation management for Vault. You can create, update, and delete secrets through playbooks.

-

Existing community.hashi_vault users: The hashicorp.vault collection is intended to replace the unsupported community.hashi_vault collection. Follow the migration path to keep using your existing playbooks. For more information, see Migrating from community.hashi_vault.

-

New Vault users: The hashicorp.vault collection is included in the supported execution environment from automation hub.

Note

Although the

2.2. Authenticating to hashicorp.vault

After you install or migrate to the hashicorp.vault collection, authentication is configured in the Ansible Automation Platform user interface. An administrator creates a custom credential type to authenticate to Vault. Then users create credentials to use with job templates.

2.2.1. Authentication architecture

The hashicorp.vault collection manages authentication through environment variables and client initialization. This approach improves security because sensitive credentials do not need to be passed directly as module parameters in playbook tasks.

Ansible Automation Platform injects the credentials into jobs as environment variables through a custom credential type, so your task definitions stay simpler and cleaner while authentication details remain secure.

The following authentication types are supported:

-

appRole authentication: Use one of the following methods:

-

Set the VAULT_APPROLE_ROLE_ID and VAULT_APPROLE_SECRET_ID environment variables. When you use environment variables, you must also create a custom credential type and credentials that are passed to the job template.

-

Pass the role_id and secret_id parameters directly to the tasks, for example:

- name: Create a secret with AppRole authentication
  hashicorp.vault.kv2_secret:
    url: https://vault.example.com:8200
    auth_method: approle
    role_id: "{{ vault_role_id }}"
    secret_id: "{{ vault_secret_id }}"
    path: myapp/config
    data:
      api_key: secret-api-key

-

-

Token authentication: Set the VAULT_TOKEN environment variable. Optionally, you can pass the token directly to the task with the token parameter. If you do not provide the parameter, the module uses the environment variable.
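The precedence described above, with explicit task parameters first and environment variables as the fallback, can be sketched as follows. This Python function is a hypothetical model written for illustration, not code from the hashicorp.vault collection. It assumes that the same fallback applies to appRole authentication, takes the parameter names (auth_method, token, role_id, secret_id) from the examples in this document, and defaults to token, matching the auth_method default listed for the KV2 modules.

```python
import os
from typing import Mapping, Optional


def resolve_vault_auth(
    params: Mapping[str, Optional[str]],
    env: Mapping[str, str] = os.environ,
) -> dict:
    """Hypothetical model: task parameters win, environment variables fill the gaps."""
    method = params.get("auth_method") or "token"
    if method == "token":
        token = params.get("token") or env.get("VAULT_TOKEN")
        if not token:
            raise ValueError("token auth needs a token parameter or VAULT_TOKEN")
        return {"auth_method": "token", "token": token}
    if method == "approle":
        role_id = params.get("role_id") or env.get("VAULT_APPROLE_ROLE_ID")
        secret_id = params.get("secret_id") or env.get("VAULT_APPROLE_SECRET_ID")
        if not (role_id and secret_id):
            raise ValueError("approle auth needs role_id and secret_id as parameters or environment variables")
        return {"auth_method": "approle", "role_id": role_id, "secret_id": secret_id}
    raise ValueError(f"unsupported auth_method: {method!r}")
```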

2.2.2. Creating a custom credential type

As an admin, you create a secure credential type in Ansible Automation Platform, which is used to authenticate to Vault.

You can configure role-based (appRole) authentication or allow users to directly provide a token.

Do one of the following:

-

New users: Install the Ansible Automation Platform certified hashicorp.vault collection from automation hub.

-

community.hashi_vault collection users: Migrate from community.hashi_vault. For more information, see Migrating from community.hashi_vault.

-

Log in to Ansible Automation Platform.

-

From the navigation panel, select .

-

Click Create a credential type. The Create Credential Type page opens.

-

Enter a name and a description in the corresponding fields.

-

If you want to configure token authentication for individual users:

-

For Input configuration, enter:

fields:
  - id: vault_token
    type: string
    label: Hashicorp Vault Token
    secret: true

-

For Injector configuration, enter:

env:
  VAULT_TOKEN: '{{ vault_token }}'

-

-

If you want to configure appRole authentication using role_id and secret_id:

-

For Input configuration, enter:

fields:
  - id: vault_approle_role_id
    type: string
    label: Hashicorp Vault appRole Role ID
    secret: true
  - id: vault_approle_secret_id
    type: string
    label: Hashicorp Vault appRole Secret ID
    secret: true

-

For Injector configuration, enter:

env:
  VAULT_APPROLE_ROLE_ID: '{{ vault_approle_role_id }}'
  VAULT_APPROLE_SECRET_ID: '{{ vault_approle_secret_id }}'

-

-

Click Create credential type.
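When a job runs with a credential of this type, Ansible Automation Platform renders the injector configuration with the credential's input values and exports the result as environment variables, which the hashicorp.vault modules then read. The following Python sketch models that substitution for the appRole credential type above. It is a simplification: Ansible Automation Platform uses Jinja2 templating for injectors, whereas this hypothetical render_env function handles only plain {{ name }} placeholders with a regular expression.

```python
import re
from typing import Dict, Mapping

# Injector configuration from the appRole credential type, as a Python dict.
APPROLE_INJECTORS = {
    "env": {
        "VAULT_APPROLE_ROLE_ID": "{{ vault_approle_role_id }}",
        "VAULT_APPROLE_SECRET_ID": "{{ vault_approle_secret_id }}",
    }
}

# Matches simple placeholders such as "{{ vault_token }}" (no filters or expressions).
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_env(injectors: Mapping, inputs: Mapping[str, str]) -> Dict[str, str]:
    """Return the environment variables a job would receive (simplified model)."""
    def substitute(template: str) -> str:
        # A KeyError here means the credential lacks a field the injector expects.
        return _PLACEHOLDER.sub(lambda match: inputs[match.group(1)], template)

    return {name: substitute(value) for name, value in injectors.get("env", {}).items()}


print(render_env(APPROLE_INJECTORS, {
    "vault_approle_role_id": "example-role-id",
    "vault_approle_secret_id": "example-secret-id",
}))
# {'VAULT_APPROLE_ROLE_ID': 'example-role-id', 'VAULT_APPROLE_SECRET_ID': 'example-secret-id'}
```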

2.2.3. Creating a custom credential

Vault users must create a custom credential to use with job templates in Ansible Automation Platform.

-

Your administrator has created a Vault credential type.

-

Log in to Ansible Automation Platform.

-

From the navigation panel, select , and then select Create credential.

-

Enter a name and a description in the corresponding fields.

-

(Optional) From the Organization list, select an organization.

-

From the Credential type list, select a Vault credential type. The fields that display depend on the credential type.

-

Do one of the following:

-

For token authentication, add your Vault token and edit any other fields as needed.

-

For the appRole authentication method, enter the IDs in the appRole Role ID and appRole Secret ID fields. Edit any other fields as needed.

-

-

Click Save credential. You are ready to use the credential in a job template.

2.3. Migrating from community.hashi_vault

If you are using the community.hashi_vault collection, you can migrate your existing playbooks to the hashicorp.vault collection.

2.3.1. Configuring KV1 modules

If you use KV1 with the community.hashi_vault collection, configure the corresponding modules in the hashicorp.vault collection.

Configuring the hashicorp.vault.kv1_secret module

-

Configuring this module is not required for migration because community.hashi_vault has no corresponding module. However, after the migration you might want to set auth_method and state to values other than their defaults. You can use the examples on Ansible automation hub for reference.

Configuring the hashicorp.vault.kv1_secret_info module

The hashicorp.vault.kv1_secret_info module reads KV1 secrets.

The corresponding community.hashi_vault modules are:

-

community.hashi_vault.vault_kv1_get: Retrieves secrets from the HashiCorp Vault KV version 1 secret store. -

community.hashi_vault.vault_kv1_get lookup: Retrieves secrets from the HashiCorp Vault KV version 1 secret store.

-

Map the parameters of your community.hashi_vault tasks to the following hashicorp.vault.kv1_secret_info parameters.

engine_mount_point:
  description: KV secrets engine mount point.
  default: secret
  type: str
  aliases: [secret_mount_path]
path:
  description:
    - Specifies the path of the secret.
  required: true
  type: str
  aliases: [secret_path]
extends_documentation_fragment:
  - hashicorp.vault.vault_auth.modules

-

(Required) Configure the path parameter. This is the path to the secret in the community.hashi_vault modules. Alias: secret_path

-

If needed, configure the optional parameters.

Configuring the hashicorp.vault.kv1_secret_get lookup plugin

The hashicorp.vault.kv1_secret_get lookup plugin reads KV1 secrets.

The corresponding community.hashi_vault modules are:

-

community.hashi_vault.hashi_vault: Retrieves secrets from HashiCorp Vault. -

community.hashi_vault.vault_kv1_get lookup: Gets secrets from the HashiCorp Vault KV version 1 secret store.

-

Map the parameters of your community.hashi_vault lookups to the following hashicorp.vault.kv1_secret_get parameters.

auth_method:
  description: Authentication method to use.
  choices: ['token', 'approle']
  default: token
  type: str
engine_mount_point:
  description:
    - The KV secrets engine mount point.
  default: secret
  type: str
  aliases: ['mount_point', 'secret_mount_path']
secret:
  description:
    - The Vault path to the secret being requested.
  required: true
  type: str
  aliases: ['secret_path']

-

(Required) Configure the secret parameter. This maps to secret in the community.hashi_vault.hashi_vault modules. Alias: secret_path

-

If needed, configure the optional parameters.
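Because the hashicorp.vault options accept aliases, many existing parameter names carry over unchanged. The following Python sketch resolves aliases to their primary option names, using the alias lists in the two parameter specifications above, and reports when two names in the same task set the same option. The KV1_ALIASES table and the canonicalize function are hypothetical helpers for reviewing a migration, not part of either collection.

```python
from typing import Dict, Mapping

# Alias -> primary option name, copied from the parameter specifications above.
KV1_ALIASES: Dict[str, Dict[str, str]] = {
    "hashicorp.vault.kv1_secret_info": {
        "secret_mount_path": "engine_mount_point",
        "secret_path": "path",
    },
    "hashicorp.vault.kv1_secret_get": {
        "mount_point": "engine_mount_point",
        "secret_mount_path": "engine_mount_point",
        "secret_path": "secret",
    },
}


def canonicalize(target: str, params: Mapping[str, object]) -> Dict[str, object]:
    """Rename aliased options to their primary names and reject duplicate settings."""
    aliases = KV1_ALIASES[target]
    result: Dict[str, object] = {}
    for name, value in params.items():
        primary = aliases.get(name, name)  # names without an alias pass through unchanged
        if primary in result:
            raise ValueError(f"{name!r} sets {primary!r}, which is already set")
        result[primary] = value
    return result


print(canonicalize("hashicorp.vault.kv1_secret_get", {"mount_point": "kv", "secret_path": "hello"}))
# {'engine_mount_point': 'kv', 'secret': 'hello'}
```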

Migration example for the hashicorp.vault.kv1_secret_info module

The following example shows before and after configurations for the hashicorp.vault.kv1_secret_info module.

Example:

Before (community.hashi_vault)

- name: Read a kv1 secret from Vault (community collection)
  community.hashi_vault.vault_kv1_get:
    url: https://vault:8201
    token: "{{ vault_token }}"
    path: hello
  register: response

After (hashicorp.vault)

- name: Read a kv1 secret from Vault (hashicorp.vault collection)
  hashicorp.vault.kv1_secret_info:
    url: https://vault:8201
    token: "{{ vault_token }}"
    path: hello
  register: response

Migration example for the hashicorp.vault.kv1_secret_get lookup

The following example shows the KV1 secret get lookup.

Example:

Before (community.hashi_vault)

- name: Retrieve a secret from the Vault
  ansible.builtin.debug:
    msg: "{{ lookup('community.hashi_vault.vault_kv1_get', 'hello', url='https://vault:8201') }}"

After (hashicorp.vault)

- name: Retrieve a secret from the Vault
  ansible.builtin.debug:
    msg: "{{ lookup('hashicorp.vault.kv1_secret_get', secret='hello', url='https://vault:8201') }}"

2.3.2. Configuring KV2 modules

If you use KV2 with the community.hashi_vault collection, configure the corresponding modules in the hashicorp.vault collection.

Configuring the hashicorp.vault.kv2_secret module

The hashicorp.vault.kv2_secret module creates, updates, and deletes KV2 secrets through a unified interface. To read KV2 secrets, use hashicorp.vault.kv2_secret_info.

The corresponding community.hashi_vault modules are:

-

community.hashi_vault.vault_kv2_write: Write KV2 secrets.

-

community.hashi_vault.vault_kv2_delete: Delete KV2 secrets.

-

Install the Ansible Automation Platform certified hashicorp.vault collection.

-

Map the tasks that use either community.hashi_vault module to the following hashicorp.vault.kv2_secret parameters, which are similar to the community.hashi_vault parameters.

auth_method:
  description: Authentication method to use
  type: str
  choices: [token, approle]
  default: token
  required: false
cas:
  description: Perform a check-and-set operation.
  type: int
  required: false
data:
  description: KV2 secret data to write.
  type: dict
  required: true
engine_mount_point:
  description: The path where the secret backend is mounted.
  type: str
  default: secret
  required: false
  aliases: [secret_mount_path]
namespace:
  description: Vault namespace where secrets reside.
  type: str
  default: admin
  aliases: [vault_namespace]
path:
  description: Vault KVv2 path to be written to.
  type: str
  required: true
  aliases: [secret_path]
url:
  description: URL of the Vault service
  type: str
  aliases: [vault_address]
  required: true
versions:
  description: One or more versions of the secret to delete.
  type: list of int
  required: false
state:
  description: Desired state of the secret
  type: str
  choices: [present, absent]
  default: present

-

Use the state parameter of the hashicorp.vault.kv2_secret module, shown in the specification above, to choose the operation. Because state defaults to present, you only need to add it for deletes. Valid options are:

-

present: The equivalent of community.hashi_vault.vault_kv2_write (create or update a secret).

-

absent: The equivalent of community.hashi_vault.vault_kv2_delete (delete a secret).

-
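The cas, versions, and state options follow the versioning model of the Vault KV version 2 secrets engine. The following Python sketch is an in-memory illustration of that model, not a Vault client: each write creates a new version, a check-and-set write succeeds only if cas equals the current version number (0 means the secret must not exist yet), and a delete marks either the latest version or the listed versions as deleted without renumbering the others. The KV2SecretModel class is hypothetical and does not model undeleting or permanently destroying versions.

```python
from typing import Dict, Iterable, Optional


class KV2SecretModel:
    """In-memory illustration of one KV2 secret's version history."""

    def __init__(self) -> None:
        self.versions: Dict[int, Optional[dict]] = {}  # version -> data, None if deleted
        self.current = 0  # 0 means no version has been written yet

    def write(self, data: dict, cas: Optional[int] = None) -> int:
        """Like state: present. Returns the new version number."""
        if cas is not None and cas != self.current:
            raise ValueError(f"check-and-set failed: cas={cas}, current version is {self.current}")
        self.current += 1
        self.versions[self.current] = dict(data)
        return self.current

    def delete(self, versions: Optional[Iterable[int]] = None) -> None:
        """Like state: absent. Deletes the latest version unless versions are listed."""
        targets = list(versions) if versions else [self.current]
        for version in targets:
            if version in self.versions:
                self.versions[version] = None

    def read(self, version: Optional[int] = None) -> Optional[dict]:
        """Like kv2_secret_info: the latest version unless a version is given."""
        return self.versions.get(self.current if version is None else version)


secret = KV2SecretModel()
secret.write({"foo": "bar"}, cas=0)   # version 1: succeeds because nothing exists yet
secret.write({"foo": "baz"})          # version 2
secret.delete(versions=[1])           # like versions: [1] with state: absent
print(secret.read(), secret.read(1))  # {'foo': 'baz'} None
```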

Configuring the hashicorp.vault.kv2_secret_info module

The hashicorp.vault.kv2_secret_info module reads KV2 secrets.

The corresponding community.hashi_vault module is:

-

community.hashi_vault.vault_kv2_get: Gets secrets from the HashiCorp Vault KV version 2 secret store.

-

Map the parameters of your community.hashi_vault tasks to the following hashicorp.vault.kv2_secret_info parameters.

engine_mount_point:
  description: KV secrets engine mount point.
  default: secret
  type: str
  aliases: [secret_mount_path]
path:
  description: Path to the secret.
  required: true
  type: str
  aliases: [secret_path]
version:
  description: The version to retrieve.
  type: int
extends_documentation_fragment:
  - hashicorp.vault.vault_auth.modules

-

Configure the required parameters:

-

path: The path to the secret, as in the community.hashi_vault.hashi_vault modules. Alias: secret_path

-

url: Maps to url in the community.hashi_vault.hashi_vault modules. Alias: vault_address.

-

-

If needed, configure the optional parameters.

Configuring the hashicorp.vault.kv2_secret_get lookup plugin

The hashicorp.vault.kv2_secret_get lookup plugin reads KV2 secrets.

The corresponding community.hashi_vault modules are:

-

community.hashi_vault.hashi_vault: Retrieves secrets from HashiCorp Vault.

-

community.hashi_vault.vault_kv2_get lookup: Gets secrets from the HashiCorp Vault KV version 2 secret store.

-

Map the parameters of your community.hashi_vault lookups to the following hashicorp.vault.kv2_secret_get parameters.

auth_method:
  description: Authentication method to use
  type: str
  choices: [token, approle]
  default: token
  required: false
mount_point:
  description: Vault mount point
  type: str
  required: false
  aliases: [secret_mount_path]
namespace:
  description: Vault namespace where secrets reside.
  type: str
  default: admin
  aliases: [vault_namespace]
secret:
  description: Vault path to the secret being requested in the format path[:field]
  type: str
  required: true
  aliases: [secret_path]
url:
  description: URL of the Vault service
  type: str
  aliases: [vault_address]
  required: true
version:
  description: Specifies the version to return. If not set the latest is returned.
  type: int
  required: false

-

Use the following guidance to configure the hashicorp.vault.kv2_secret_get parameters:

-

auth_method: Maps identically to auth_method in the community.hashi_vault.hashi_vault modules.

-

mount_point: Maps to mount_point in the community.hashi_vault.hashi_vault modules. Alias: secret_mount_path.

-

namespace: Maps to namespace in the community.hashi_vault.hashi_vault modules. Alias: vault_namespace.

-

secret: Maps to secret in the community.hashi_vault.hashi_vault modules. Alias: secret_path.

-

url: Maps to url in the community.hashi_vault.hashi_vault modules. Alias: vault_address.

-

version: Maps identically to version in the community.hashi_vault.hashi_vault modules.

-
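The secret option of hashicorp.vault.kv2_secret_get takes a path with an optional field name in the format path[:field]. The following Python sketch shows one way to interpret that format: split at the first colon, then return either the whole secret data or the single field. The function names are hypothetical, and splitting at the first colon is an assumption; check the plugin documentation if your paths or field names contain colons.

```python
from typing import Any, Dict, Optional, Tuple


def split_secret_term(term: str) -> Tuple[str, Optional[str]]:
    """Split 'path[:field]' into (path, field); field is None when omitted."""
    path, separator, field = term.partition(":")
    if not path:
        raise ValueError("the secret path must not be empty")
    return path, (field if separator else None)


def select_field(secret_data: Dict[str, Any], field: Optional[str]) -> Any:
    """Return the whole secret, or only the requested field."""
    return secret_data if field is None else secret_data[field]


path, field = split_secret_term("myapp/config:api_key")
print(path, field)                                         # myapp/config api_key
print(select_field({"api_key": "secret-api-key"}, field))  # secret-api-key
```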

Migration examples for the hashicorp.vault.kv2_secret module

The following examples show basic before and after configurations for the hashicorp.vault.kv2_secret module.

Note

KV2 delete operations are

Example 1: Basic Secret Write/Create

Before (community.hashi_vault):

- name: Write/create a secret
  community.hashi_vault.vault_kv2_write:
    url: https://vault:8200
    path: hello
    data:
      foo: bar

After (hashicorp.vault):

- name: Write/create a secret
  hashicorp.vault.kv2_secret:
    url: https://vault:8200
    path: hello
    data:
      foo: bar

Example 2: Basic Secret Delete

Before (community.hashi_vault):

- name: Delete the latest version of the secret/mysecret secret.
  community.hashi_vault.vault_kv2_delete:
    url: https://vault:8201
    path: secret/mysecret

After (hashicorp.vault):

- name: Delete the latest version of the secret/mysecret secret.
  hashicorp.vault.kv2_secret:
    url: https://vault:8201
    path: secret/mysecret
    state: absent

Example 3: Secret Delete - specific version

Before (community.hashi_vault):

- name: Delete versions 1 and 3 of the secret/mysecret secret.
  community.hashi_vault.vault_kv2_delete:
    url: https://vault:8201
    path: secret/mysecret
    versions: [1, 3]

After (hashicorp.vault):

- name: Delete versions 1 and 3 of the secret/mysecret secret.
  hashicorp.vault.kv2_secret:
    url: https://vault:8201
    path: secret/mysecret
    versions: [1, 3]
    state: absent

Migration examples for the hashicorp.vault.kv2_secret_info module

The following examples show before and after configurations for the hashicorp.vault.kv2_secret_info module.

Example 1: Read a secret with token authentication

Before (community.hashi_vault)

- name: Read the latest version of a kv2 secret from Vault
  community.hashi_vault.vault_kv2_get:
    url: https://vault.example.com:8200
    token: "{{ vault_token }}"
    path: myapp/config
  register: response

After (hashicorp.vault)

- name: Read a secret with token authentication
  hashicorp.vault.kv2_secret_info:
    url: https://vault.example.com:8200
    token: "{{ vault_token }}"
    path: myapp/config
  register: response

Example 2: Read a secret with a specific version

Before (community.hashi_vault)

- name: Read version 5 of a secret from kv2
  community.hashi_vault.vault_kv2_get:
    url: https://vault.example.com:8200
    path: myapp/config
    version: 5

After (hashicorp.vault)

- name: Read a secret with a specific version
  hashicorp.vault.kv2_secret_info:
    url: https://vault.example.com:8200
    path: myapp/config
    version: 5
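The module-based before-and-after pairs in this section follow a mechanical pattern: swap the module name and, for deletes, add state: absent. The following Python sketch applies that pattern to a task represented as a dict, for example one loaded from a playbook with a YAML parser. MODULE_MAP and convert_task are hypothetical helpers that only rename modules; review every converted task yourself, including parameters, authentication, and lookups, which this sketch does not change.

```python
from typing import Any, Dict, Optional, Tuple

# community.hashi_vault module -> (hashicorp.vault module, state to set or None)
MODULE_MAP: Dict[str, Tuple[str, Optional[str]]] = {
    "community.hashi_vault.vault_kv1_get": ("hashicorp.vault.kv1_secret_info", None),
    "community.hashi_vault.vault_kv2_get": ("hashicorp.vault.kv2_secret_info", None),
    # state defaults to present, so writes need no state option.
    "community.hashi_vault.vault_kv2_write": ("hashicorp.vault.kv2_secret", None),
    "community.hashi_vault.vault_kv2_delete": ("hashicorp.vault.kv2_secret", "absent"),
}


def convert_task(task: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the task with community.hashi_vault modules swapped."""
    converted: Dict[str, Any] = {}
    for key, value in task.items():  # dicts keep insertion order, so name stays first
        if key in MODULE_MAP:
            new_module, state = MODULE_MAP[key]
            args = dict(value)  # copy so the original task is not modified
            if state is not None:
                args["state"] = state
            converted[new_module] = args
        else:
            converted[key] = value  # name, register, and other task keywords
    return converted


before = {
    "name": "Delete the latest version of the secret/mysecret secret.",
    "community.hashi_vault.vault_kv2_delete": {"url": "https://vault:8201", "path": "secret/mysecret"},
}
print(convert_task(before))
```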

Migration examples for the hashicorp.vault.kv2_secret_get lookup

The following example shows the KV2 secret get lookup for retrieving the latest version.

Example:

Before (community.hashi_vault)

- name: Return latest KV v2 secret from path
  ansible.builtin.debug:
    msg: "{{ lookup('community.hashi_vault.hashi_vault', 'secret=secret/data/hello token=my_vault_token url=http://myvault_url:8200') }}"

After (hashicorp.vault)

- name: Return latest KV v2 secret from path
  ansible.builtin.debug:
    msg: "{{ lookup('hashicorp.vault.kv2_secret_get', 'secret=secret/data/hello url=http://myvault_url:8200') }}"
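The legacy community.hashi_vault.hashi_vault lookup packs its options into one term of whitespace-separated key=value pairs, as in the before example. The after example omits the token, presumably relying on the VAULT_TOKEN environment variable described in Authenticating to hashicorp.vault. The following Python sketch splits such a term into named options and drops the token. It uses shlex.split for tokenizing, which is our choice rather than what either plugin does internally; the function names are hypothetical, and the sketch does not rewrite the secret path, so confirm that the path still matches your KV2 mount.

```python
import shlex
from typing import Dict, Iterable


def parse_legacy_term(term: str) -> Dict[str, str]:
    """Split 'key=value key=value ...' (any whitespace, including newlines) into a dict."""
    options: Dict[str, str] = {}
    for token in shlex.split(term):
        key, separator, value = token.partition("=")
        if not separator:
            raise ValueError(f"expected key=value, got {token!r}")
        options[key] = value
    return options


def migrate_lookup_options(term: str, drop: Iterable[str] = ("token",)) -> Dict[str, str]:
    """Keep the options the new lookup still needs; drop ones now supplied by a credential."""
    dropped = set(drop)
    return {key: value for key, value in parse_legacy_term(term).items() if key not in dropped}


legacy = "secret=secret/data/hello token=my_vault_token url=http://myvault_url:8200"
print(migrate_lookup_options(legacy))
# {'secret': 'secret/data/hello', 'url': 'http://myvault_url:8200'}
```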